Learning Objectives

- Learn strategies for aligning information technology, operational technology and business objectives.

- Recognize cybersecurity risks and how to mitigate them.

- Consider how integrated high-performance buildings support effective pandemic response.

Traditionally, information technology supported business and had minimal interaction with operational technology. Today, IT leadership and knowledge are needed to help organizations bridge the gap between IT and OT so stakeholders can assess and analyze facility data. This often leads IT to take ownership of everything that touches the network, even when the IT professionals don’t understand “internet of things” devices.

A more effective approach, known as corporate integration, strives to align IT, OT and business objectives. In corporate integration, business leaders determine what data are needed to achieve strategic objectives. IT managers make the data meaningful and accessible and OT managers offer knowledge of the applicable equipment. Advance planning, clear communication and ongoing coordination are essential.

A desire for greater energy efficiency and green buildings has been driving the evolution of high-performance buildings since the 1970s. The Energy Independence and Security Act of 2007 accelerated this process by expanding the high-performance building concept to include the integration and optimization of all high-performance attributes on a life cycle basis. There are five categories to consider when evaluating the connectivity of IT/OT systems: sustainability, cost-effectiveness, security, productivity and functionality.

To support integration across these categories, IT and OT systems must be connected. Originally, building systems used vendor-specific proprietary communication protocols. As technology advanced, more automation products and solutions became available on the market. Occupant and owner expectations drove the development of new processes and data sets, such as human resources; scheduling software; heating, ventilation and air conditioning; fire control; lighting controls; and other automation systems. With so many choices, facility managers didn’t want to be tied to the products of a specific manufacturer.

Over time, the rise of competing open protocols like BACnet, LonWorks and Modbus caused a market shift from a proprietary OT architecture to an open, interoperable OT architecture. Now, facilities rely primarily on Internet Protocol (IP)-based communication.

High-performance building benefits

While achieving integration and interoperability in high-performance buildings can be challenging, a secure and effective networked system offers many benefits. The onboarding of third-party applications becomes more efficient. Fault detection and diagnosis tools, geographic information systems, computerized maintenance management systems and operational, energy and predictive analytics can be made available faster and more easily.

The ability to track performance data and maintenance records across an integrated enterprise allows you to see that assets are available when needed, minimize the risk of asset failure and maximize the return on those assets. Similarly, an enterprisewide view of environmental conditions lets building owners and facility managers optimize temperature, lighting and space use for comfort, convenience and productivity.

Networked systems also facilitate change management by simplifying the onboarding of new employees and projects. Centralized access to critical asset data is especially beneficial for facilities facing the loss of experienced employees and their undocumented knowledge.

Additionally, networked systems support effective capital planning and economic risk management. Big data can be used to monitor wear and tear on equipment, budget for maintenance and plan for replacement. This is true for any asset and particularly valuable for those with 10- or 20-year life cycles, which can be difficult to monitor without digitization.
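As a rough illustration of how run-hour data might feed a replacement plan, the sketch below estimates remaining service life for a few assets. The asset names, rated hours and the linear-wear assumption are all hypothetical; real equipment rarely wears this predictably, so treat this as a starting point rather than a method.

```python
from dataclasses import dataclass


@dataclass
class Asset:
    name: str
    rated_life_hours: float  # hypothetical manufacturer rating
    run_hours: float         # accumulated hours reported by the networked system
    hours_per_year: float    # typical annual usage


def remaining_years(asset: Asset) -> float:
    """Linear-wear estimate: remaining rated hours divided by annual usage."""
    remaining_hours = max(asset.rated_life_hours - asset.run_hours, 0.0)
    return remaining_hours / asset.hours_per_year


def replacement_plan(assets, horizon_years):
    """Names of assets expected to reach end of life within the horizon, soonest first."""
    due = [(remaining_years(a), a.name) for a in assets
           if remaining_years(a) <= horizon_years]
    return [name for _, name in sorted(due)]


# Illustrative values only.
assets = [
    Asset("chiller-1", rated_life_hours=100_000, run_hours=90_000, hours_per_year=4_000),
    Asset("ahu-3", rated_life_hours=80_000, run_hours=20_000, hours_per_year=6_000),
    Asset("pump-7", rated_life_hours=50_000, run_hours=48_000, hours_per_year=5_000),
]

print(replacement_plan(assets, horizon_years=5))  # ['pump-7', 'chiller-1']
```

A list like this could be reviewed alongside maintenance records when budgeting for long-lived equipment.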

Cybersecurity concerns

Networking building automation systems offers many benefits, including giving an approved third-party service provider remote access so it can identify and address problems and providing flexibility to optimize or modify operations to increase efficiency and decrease costs. But increasing connectivity and data transfer can also increase security risks.

Cyberattackers are experts in exploiting known vulnerabilities to steal data, disrupt operations and jeopardize the safety of property and people. In recent high-profile security breaches, attackers have used remote access privileges to gain entry to financial systems that house customer data. Other threats, such as taking control of OT systems to manipulate HVAC, fire control or other systems, are less visible but potentially more dangerous.

To reduce these risks, some building owners and operators have taken the extreme approach of isolating their networks or canceling planned integration projects. This response is understandable, but it diminishes their ability to take advantage of applications developed to realize return on investment in high-performance building assets. Fortunately, it is possible to reap the benefits of integration while also providing data security.

The best way to do this is to begin with the end goal in mind. To mitigate cybersecurity risks, design engineers should coordinate the vendors and IT professionals, make sure IT is aware of what each party is trying to accomplish and develop protocols and procedures for outside parties that have remote access.

High-performance building objectives

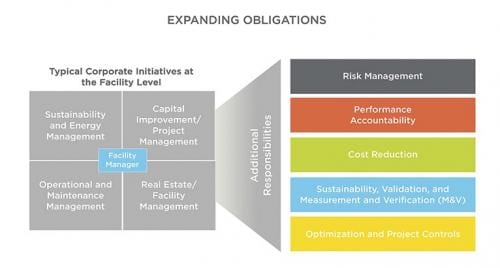

At the highest level, the goal of any integration project is the secure transfer of data needed to accomplish business objectives. To achieve that goal, facility managers need to understand their stakeholders and the stakeholders’ objectives.

In most organizations, the networked system will be used by many managers:

- The facility manager is responsible for seeing that the security, maintenance and services of work facilities meet the needs of the organization and its employees.

- The energy manager audits the energy efficiency of the facility, identifies areas of energy waste and develops and measures the outcomes of energy conservation projects.

- The sustainability manager develops and manages corporate sustainability programs, procures sustainable acquisitions and evaluates and improves existing processes.

- The real estate manager oversees an organization’s real estate portfolio, supporting efficient, safe and cost-effective operations and space use of all facilities.

- The IT manager is responsible for the supervision, implementation, security and maintenance of an organization’s computer and network environment. IT managers are also responsible for working with the other departments to onboard, manage and maintain third-party applications, which may be hosted either on-premises or on the cloud.

Successful integration projects start by consulting with these leaders to define their specific needs. This requires developing detailed answers to a number of questions, including:

- What business benefits does the manager expect the networked system to provide?

- Are the existing systems generating the data needed to realize these benefits?

- If not, what types of data are missing? What systems need to be added to generate this data?

- Can all of these systems communicate effectively and securely?

- Are all relevant parties receiving the data they need?

- How much flexibility do managers need to build into the system to accommodate building changes?

Taking time to answer these questions helps designers and building owners identify “must have” data and features, develop measurable goals and anticipate challenges. The next step is to define the enterprise relative to objectives.

Facilitate IT/OT cooperation

Implementing a comprehensive data solution requires integrating the energy, facility and business domains of an organization. Each domain uses different technologies, performance indicators and skill sets as described below:

- Facility domain. The facility domain encompasses all systems that capture data related to the equipment, amenities and other physical assets of a high-performance building. This includes data regarding the operation, access and service of systems such as HVAC, lighting, physical security, utility metering and elevators.

- Energy domain. This domain consists of the systems that capture the energy and utility performance of a facility. This involves measuring upstream consumption through electricity, natural gas and water metering. It also includes submetering the downstream systems that consume those resources, such as air conditioning, heating and toilets.

- Business domain. The business domain contains all systems related to capital planning, including those that allow an owner to use asset performance data, understand asset health and make informed financial decisions. This domain also includes data systems related to space use and the allocation of associated costs. It could also include integrating the systems that impact the occupant: room booking, digital signage, knowledge management, etc.
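To make the relationship between upstream metering and downstream submetering concrete, the sketch below compares a main meter reading with the sum of its submeters and reports the share of consumption that no submeter accounts for. The meter names and readings are hypothetical, and the function assumes all readings cover the same period and use the same unit.

```python
from typing import Mapping


def unmetered_share(main_reading: float, submeters: Mapping[str, float]) -> float:
    """Fraction of the main meter reading not captured by any submeter."""
    if main_reading <= 0:
        raise ValueError("main meter reading must be positive")
    return (main_reading - sum(submeters.values())) / main_reading


# Illustrative monthly electricity readings in kWh.
main_kwh = 1000.0
sub_kwh = {"hvac": 450.0, "lighting": 200.0, "plug_loads": 150.0}

share = unmetered_share(main_kwh, sub_kwh)
print(f"{share:.0%} of consumption is not submetered")
```

A large unmetered share suggests the energy domain is not yet generating the data needed to locate waste. A negative share points to a metering or data quality problem.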

Integrating these three domains is a complex process that begins with defining the IT/OT workflow necessary to achieve overall interoperability goals. As part of this process:

- Define what type of data needs to be generated.

- Identify where various data currently reside.

- Put systems in place to generate any additional data needed.

- Specify how the relevant systems will communicate.

- See that data can be transferred securely where it is needed.
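The first three steps above amount to a simple inventory exercise. The sketch below is one way to express it, assuming hypothetical system names and data point names: it records where each required data point currently resides and lists the points no existing system generates.

```python
def locate(required, sources):
    """Map each required data point to the systems that currently hold it."""
    return {
        item: sorted(system for system, points in sources.items() if item in points)
        for item in sorted(required)
    }


def find_gaps(required, sources):
    """Required data points that no existing system generates."""
    available = set().union(*sources.values())
    return set(required) - available


# Hypothetical requirements gathered from stakeholders.
required = {"zone_temp", "occupancy", "kwh_submeter", "work_orders"}

# Hypothetical inventory of where data currently reside.
sources = {
    "BAS": {"zone_temp", "supply_air_temp"},
    "CMMS": {"work_orders"},
}

print(locate(required, sources))
print(sorted(find_gaps(required, sources)))  # ['kwh_submeter', 'occupancy']
```

Each gap then becomes a candidate for a new system or sensor, and each located item becomes a data flow whose communication path and security still need to be specified.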

It is important to see that IT and OT are on the same page early in this process. IT managers are often the first to recognize the potential of networked building systems. They have the knowledge and experience needed to make data meaningful and accessible, as well as to implement effective cybersecurity protocols. They lack detailed knowledge of the equipment that needs to be connected, however.

To achieve the organization’s networking goals, IT needs to support and be involved with the implementation of OT. However, simply understanding each other’s needs and limitations often proves difficult. This is especially true on facility integration projects because these smart devices are foreign to IT and require knowledge and experience across electrical, mechanical, cybersecurity and critical infrastructure systems.

Ideally, every project team would include multidisciplinary personnel with knowledge and experience in both OT and IT, but this combined skill set can be hard to find. When such multidisciplinary backgrounds and skills aren’t available in-house, a third-party expert can be a valuable addition to the team.

Ultimately, the data flow must be architected in a way that is secure and efficient, while also allowing third-party service providers remote access. Working together, IT and OT can recommend and implement best practices for secure network design. Typically, this will include hardening of systems, detection and protection against malware and ransomware, regular patching and other cybersecurity hygiene, continuous vulnerability management, proper segregation of IT and OT systems and use of demilitarized zones.
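One way to reason about segregation and demilitarized zones is to write down which zone-to-zone flows are permitted and check proposed connections against that list. The sketch below does this with hypothetical zone names and rules. It is a planning aid for illustration, not a substitute for firewall configuration, and the allowed flows shown are assumptions rather than a recommended policy.

```python
# Hypothetical zones and permitted flows, for illustration only.
ALLOWED_FLOWS = {
    ("OT", "DMZ"),      # building systems publish trend data to a DMZ historian
    ("IT", "DMZ"),      # business applications read from the DMZ historian
    ("VENDOR", "DMZ"),  # third-party remote access terminates at a DMZ jump host
    ("DMZ", "OT"),      # the jump host reaches OT devices on approved ports
}


def is_allowed(src: str, dst: str) -> bool:
    """Traffic within a zone is allowed; cross-zone traffic must be listed."""
    return src == dst or (src, dst) in ALLOWED_FLOWS


def audit(flows):
    """Return the proposed (src, dst) flows that are not permitted."""
    return [flow for flow in flows if not is_allowed(*flow)]


proposed = [("VENDOR", "DMZ"), ("VENDOR", "OT"), ("IT", "OT"), ("OT", "DMZ")]
print(audit(proposed))  # [('VENDOR', 'OT'), ('IT', 'OT')]
```

In this sketch, a vendor connecting straight to OT equipment and IT reaching directly into OT are both flagged, because each bypasses the demilitarized zone.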

Data modeling in high-performance buildings

System integration makes it possible to generate the operational data that managers need to achieve their sustainability goals. Many companies assume that if they have data, they can do an effective analysis. But few companies have reliable access to high-quality data that is valuable at the point of decision-making.

Some operational data available today is low quality. Data entry errors, lack of business rules defining acceptable data values and problems related to migrating data from one system to another result in malformed, duplicative and missing data. For example, the thermostats in a building may be programmed to read “room temperature,” while the algorithm used to monitor temperature is programmed to search for “zone temp.” Therefore, any attempts to analyze temperature are unsuccessful.
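The naming mismatch in this example can be handled by mapping device labels to one canonical name before analysis. The sketch below assumes a hypothetical alias table and canonical name (`zone_temp`). Unknown labels return `None` so they can be flagged for review instead of being silently dropped.

```python
from typing import Optional

# Hypothetical alias table: device labels mapped to one canonical point name.
ALIASES = {
    "room temperature": "zone_temp",
    "room temp": "zone_temp",
    "zone temp": "zone_temp",
    "zone temperature": "zone_temp",
}


def normalize(label: str) -> Optional[str]:
    """Return the canonical name for a device label, or None if unrecognized."""
    key = " ".join(label.lower().replace("_", " ").replace("-", " ").split())
    return ALIASES.get(key)


readings = {"Room Temperature": 71.5, "zone_temp": 70.2, "Discharge Air": 55.0}

normalized = {}
unrecognized = []
for label, value in readings.items():
    name = normalize(label)
    if name is None:
        unrecognized.append(label)
    else:
        normalized.setdefault(name, []).append(value)

print(normalized)    # {'zone_temp': [71.5, 70.2]}
print(unrecognized)  # ['Discharge Air']
```

With the labels normalized, an algorithm that searches for one name finds readings from thermostats that were programmed with another.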

To be valuable, operational data needs to be carefully managed from creation through extraction, translation and consumption. Data modeling is an important aspect of this process. Data modeling involves defining data entities (the objects tracked), their attributes and their relationships. The first step is to give the data meaning.

Because of the vast quantity of data being generated, establishing and enforcing a consistent tagging system can be challenging. Open source initiatives like Project Haystack streamline working with internet of things data by standardizing semantic data models. Working within Project Haystack naming conventions and taxonomies eliminates the need to maintain rigid schemas.
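To show what tag-based modeling can look like, the sketch below represents entities as dictionaries of tags and finds points by the marker tags they carry. It is a plain-Python approximation, not the Project Haystack library or its data formats. The tag names (`site`, `equip`, `point`, `sensor`, `zone`, `air`, `temp`, `siteRef`, `equipRef`) follow Haystack conventions, while the entity ids, display names and the use of `True` to stand in for a marker are assumptions made for illustration.

```python
MARKER = True  # simplification: a marker tag is modeled as the value True

# Entities are tag dictionaries; *Ref tags express relationships between them.
entities = [
    {"id": "site-1", "dis": "Headquarters", "site": MARKER},
    {"id": "ahu-1", "dis": "AHU-1", "equip": MARKER, "ahu": MARKER,
     "siteRef": "site-1"},
    {"id": "pt-1", "dis": "Room 101 temperature", "point": MARKER, "sensor": MARKER,
     "zone": MARKER, "air": MARKER, "temp": MARKER,
     "equipRef": "ahu-1", "siteRef": "site-1"},
    {"id": "pt-2", "dis": "AHU-1 discharge temperature", "point": MARKER,
     "sensor": MARKER, "discharge": MARKER, "air": MARKER, "temp": MARKER,
     "equipRef": "ahu-1", "siteRef": "site-1"},
]


def find(records, *markers):
    """Return the records that carry every one of the given marker tags."""
    return [r for r in records if all(r.get(m) is MARKER for m in markers)]


zone_temps = find(entities, "zone", "air", "temp", "sensor")
print([p["dis"] for p in zone_temps])  # ['Room 101 temperature']
```

Because the query relies on tags and not on display names, it returns the zone temperature sensor regardless of how the point was labeled on the device.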

Next, asset classification standards allow the team to define building elements as major components common to most buildings. Subject matter experts can provide common threads linking activities, costs and participants in a building project from initial planning through operations, maintenance and disposal. UniFormat, OmniClass and MasterFormat are three widely used classification systems for the construction industry. Modeling operational data in this way allows you to normalize the data so that many systems can onboard it effectively and efficiently.

The networking of building automation systems and other assets makes it possible for facility managers to achieve their sustainability goals across a variety of high-performance attributes. However, network integration introduces potential cybersecurity risks.

By carefully specifying how systems will be connected and data will be used, managers can anticipate risks and design appropriate cybersecurity measures into networked systems. With advance planning and open communication, they can feel confident about the safe and reliable operations of high-performance buildings, as well as the safety and productivity of the people who work in them.

Original content can be found at Consulting - Specifying Engineer.